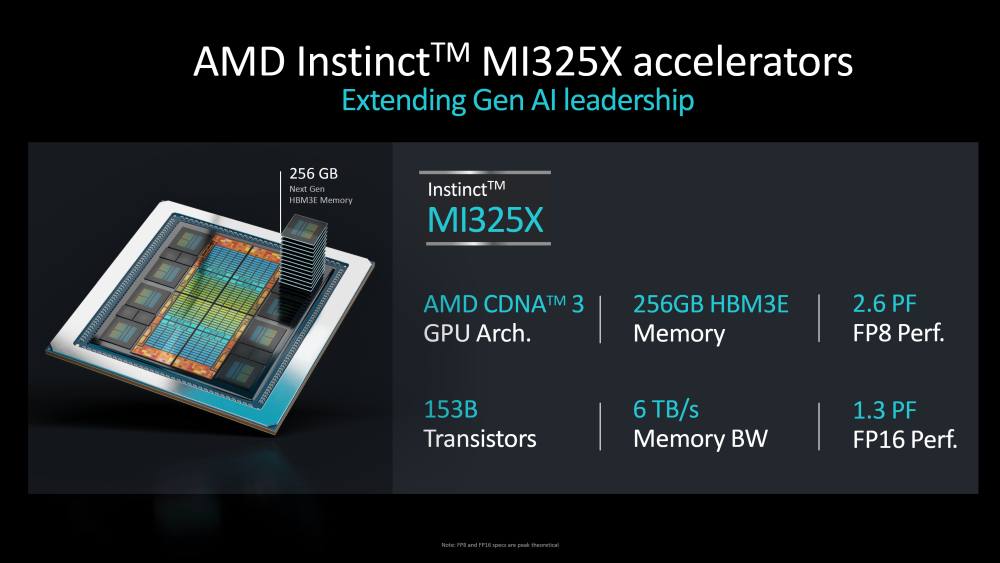

The Instinct MI325X is set to start shipping in Q4 2024 and be available through select partners in Q1 2025. It will be part of the AI Instinct server, which will pack up to eight MI325X GPUs and offer up to 2TB of HBM3E memory with 48TB/s of memory bandwidth, 896GB/s of Infinity Fabric bandwidth, and 20.8 PFLOPs and 10.4 PFLOPs of FP8 and FP16 compute performance, respectively. In case you are wondering, each GPU is configured at a 1000W TDP.
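For a rough sense of what those platform totals mean per accelerator, the figures can be divided by the GPU count. The short Python sketch below is only back-of-the-envelope arithmetic. It assumes the quoted totals are simple sums across eight identical GPUs and that 2TB means 2048GB; the dictionary keys and the `per_gpu` helper are illustrative names, not anything from AMD.

```python
# Platform-level figures quoted for the eight-GPU MI325X server.
GPU_COUNT = 8
PLATFORM = {
    "hbm3e_capacity_gb": 2 * 1024,  # 2TB, assuming 1TB = 1024GB
    "memory_bandwidth_tb_s": 48.0,
    "fp8_pflops": 20.8,
    "fp16_pflops": 10.4,
}
TDP_PER_GPU_W = 1000


def per_gpu(platform: dict, gpu_count: int) -> dict:
    """Divide each platform total evenly across the GPUs (an assumption)."""
    return {name: total / gpu_count for name, total in platform.items()}


if __name__ == "__main__":
    for name, value in per_gpu(PLATFORM, GPU_COUNT).items():
        print(f"{name}: {value:g}")
    # Combined GPU power only; excludes CPUs, networking, and cooling.
    print(f"combined GPU TDP: {GPU_COUNT * TDP_PER_GPU_W / 1000:g} kW")
```

Under those assumptions, each GPU works out to 256GB of HBM3E, 6TB/s of memory bandwidth, 2.6 PFLOPs of FP8 and 1.3 PFLOPs of FP16 compute, with the eight GPUs alone drawing up to 8kW.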

Performance-wise, and according to AMD's numbers, the Instinct MI325X AI accelerator should be up to 40 and 30 percent faster than the NVIDIA H200 in Mixtral 8x7B and Mistral 7B, respectively, and up to 20 percent faster in Meta's Llama 3.1 70B LLM. The results are pretty much the same when the MI325X platform is compared to NVIDIA's H200 HGX AI platform.

AMD was keen to note that the new platform will offer easy migration and an open ecosystem. On the software side, the latest ROCm 6. ecosystem will bring up to 2.4x and 2.8x performance improvements in AI workloads and training.

AMD's Instinct is slowly gaining ground and already has support from some big players in the AI market, including Meta, Microsoft, and OpenAI. AMD also revealed its plans for the future, including the CDNA 4-based MI350 series of accelerators coming next year and the next-gen architecture MI400 series of AI accelerators coming in 2026.