The 3D DRAM boasts built-in AI processing capabilities, allowing it to process and generate outputs without mathematical calculations. This advancement addresses the data bus bottleneck that arises when massive amounts of data are transferred between memory and processors, improving AI performance and efficiency.

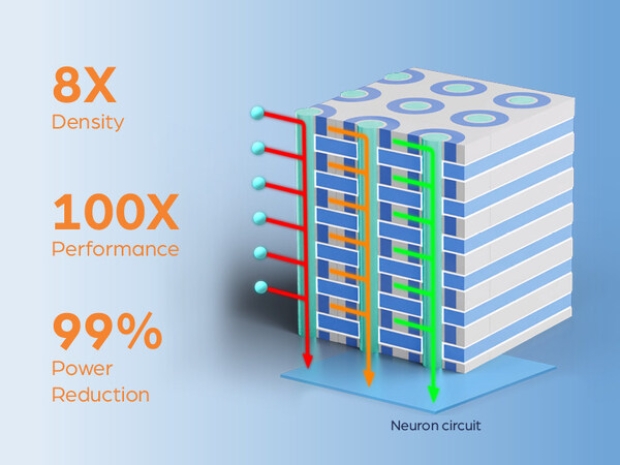

The 3D X-AI chip has a neuron circuit layer at its base, which processes data stored in the 300 memory layers on the same die.

According to NEO Semiconductor, this 3D memory offers a 100-fold performance improvement thanks to its 8,000 neuron circuits, which handle AI processing in memory. It also boasts eight times the memory density of current HBMs and, more importantly, offers a 99 per cent power reduction by minimising the amount of data that needs to be processed in power-hungry GPUs.

NEO Semiconductor founder and CEO Andy Hsu said current AI chips waste significant amounts of performance and power due to architectural and technological inefficiencies.

“The current AI Chip architecture stores data in HBM and relies on a GPU to perform all calculations. This separated data storage and processing architecture makes the data bus an unavoidable performance bottleneck. Transferring huge amounts of data through the data bus causes limited performance and very high power consumption. 3D X-AI can perform AI processing in each HBM chip. This can drastically reduce the data transferred between HBM and GPU to improve performance and reduce power consumption dramatically,” he said.

The company claims that each 3D X-AI die has a capacity of 128 GB and can support 10 TB/s of AI processing. Stacking twelve dies together in a single HBM package could achieve more than 1.5 TB of storage capacity and 120 TB/s of processing throughput.
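The stack figures follow directly from the per-die claims. The short Python sketch below reproduces the arithmetic, assuming capacity and throughput scale linearly with the number of dies and that 1 TB is 1,000 GB (both are assumptions for illustration; the company has not published how the totals were derived). The function and constant names are hypothetical.

```python
# Per-die figures as claimed by NEO Semiconductor (not independently verified).
CAPACITY_GB_PER_DIE = 128
THROUGHPUT_TBPS_PER_DIE = 10
DIES_PER_STACK = 12


def stack_totals(dies=DIES_PER_STACK):
    """Return (capacity in TB, throughput in TB/s) for a stack of dies.

    Assumes both figures scale linearly with die count and uses
    decimal units (1 TB = 1,000 GB).
    """
    capacity_tb = dies * CAPACITY_GB_PER_DIE / 1000
    throughput_tbps = dies * THROUGHPUT_TBPS_PER_DIE
    return capacity_tb, throughput_tbps


capacity_tb, throughput_tbps = stack_totals()
print(f"{capacity_tb:.3f} TB, {throughput_tbps} TB/s")
```

Twelve dies at 128 GB each come to 1,536 GB, which is where the "more than 1.5 TB" figure comes from, and twelve times 10 TB/s gives the quoted 120 TB/s.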

Other companies are tackling data transfer limits from the interconnect side: Intel, Kioxia, and TSMC have been working on optical technology to enable faster communication between components on the motherboard. NEO Semiconductor's approach instead moves some of the AI processing from the GPU into the HBM, which could reduce the GPU's workload and make the overall system far more efficient than current power-hungry AI accelerators.